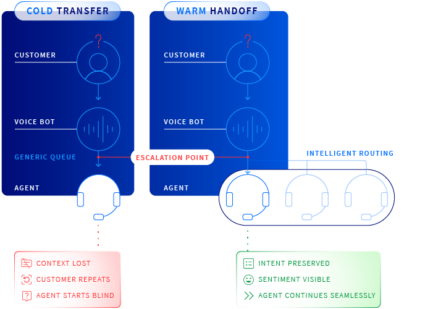

Here’s what a bad AI-to-human handoff looks like:

- Conversation history vanishes

- Agent asks customer to start over (or repeat)

- Transfer dumps customer into a generic queue (more repeating)

It happens a lot more than most companies care to admit, but the AI itself usually isn’t the problem. Most AI voice bots and chatbots understand the question and can handle the initial interaction they were built for. The breakdown happens at the transition point: the moment a customer issue exceeds what the automation can resolve and requires human judgment.

This is the work of escalation design: how service teams engineer what happens when AI reaches its limits. It’s not glamorous. But it can mean the difference between AI investments that deliver measurable ROI and expensive pilots that fizzle out.

What is Escalation Design in Customer Support?

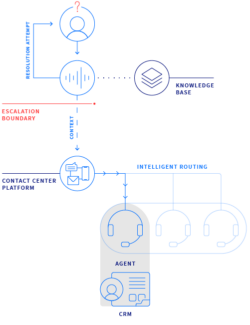

Escalation design defines when to transfer a customer, what information travels with the customer, and how well agents can pick up the thread without missing a beat. Think of it as the safety net that lets automation scale: the 80% that your AI handles only works if the 20% requiring humans is designed just as carefully.

The Handoff Is the Hidden Failure Point

The conversation about AI in contact centers tends to focus on automation capabilities: what percentage of inquiries the bot can handle; how much faster it resolves routine questions; how many agent hours it saves. These are core metrics, certainly. But they don’t always speak to a more fundamental source of friction: how escalation pathways are designed and optimized.

The numbers suggest that the escalation problem is widespread. According to Cisco, one in three agents lacks the customer context needed to deliver ideal experiences. A Qualtrics study from October 2025 found that nearly one in five consumers who used AI for customer service saw no benefit, a failure rate four times higher than for AI use in general.

Two failure patterns show up repeatedly.

- The amnesia problem. A customer spends five minutes explaining their situation to a chatbot. The bot determines that the issue requires human attention and initiates a transfer. The agent picks up with no record of the prior conversation. The customer repeats everything from the beginning. We have all been there.

- The cold transfer. The handoff happens without warning, context, or guidance. The customer lands in a generic queue. When an agent finally picks up, they have no idea why this person is calling, what’s already been attempted, or what outcome the customer expects.

In both scenarios, it’s the handoff that’s failing. And the handoff is where customer trust can take a significant hit.

What Klarna Learned: From AI-First to Escalation-Aware

Klarna’s AI implementation became one of the most discussed case studies in customer service automation. Both its aggressive initial approach and subsequent pivot are instructive.

Klarna’s automation move:

- AI assistant handles 1.3 million conversations per month, equivalent to the work of ~800 FTEs

- Resolution time: 2 minutes vs. 11 minutes with human agents

- 25% reduction in repeat inquiries

- Customer satisfaction scores on par with human agents

The headline numbers were striking. The move seemed to be a success.

Then the company’s approach evolved.

In the media, CEO Sebastian Siemiatkowski acknowledged that the company had over-weighted cost savings in its initial approach, and that prioritizing efficiency had come at the expense of quality in certain scenarios. He also pointed to a deeper issue: Existing customer service infrastructure—IVRs, FAQs, knowledge bases—hadn’t been strong to begin with.

The AI was built on a foundation that already had gaps. This required technical fixes that centered on escalation infrastructure that Klarna had initially underinvested in. For example, Klarna:

- Upgraded AI to summarize interactions before handoff, ensuring agents received full context

- Introduced confidence scoring: if the AI wasn’t certain that it could resolve an issue, it escalated to a human rather than guessing and getting it wrong

The result was that AI began handling more Level 2 support, not less, because the handoffs were cleaner, and the boundaries better defined.
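A confidence gate of this kind can be sketched in a few lines. This is an illustration only, not Klarna’s implementation: the `BotTurn` type, the `decide` function, and the 0.75 threshold are all hypothetical, and it assumes the AI exposes a confidence score between 0 and 1.

```python
from dataclasses import dataclass

# Hypothetical starting threshold; a real value would be tuned on interaction data.
ESCALATION_THRESHOLD = 0.75


@dataclass
class BotTurn:
    """A candidate AI reply plus the model's self-reported confidence."""
    reply: str
    confidence: float  # assumed to lie in [0.0, 1.0]


def decide(turn: BotTurn, threshold: float = ESCALATION_THRESHOLD) -> str:
    """Return 'answer' when confidence meets the threshold, else 'escalate'."""
    if not 0.0 <= turn.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "answer" if turn.confidence >= threshold else "escalate"


if __name__ == "__main__":
    print(decide(BotTurn("Your refund was issued on Monday.", 0.92)))
    print(decide(BotTurn("I think your plan may include this.", 0.41)))
```

The design choice worth noting is that the low-confidence branch escalates instead of answering. Where the threshold sits is a business decision about how much wrong-answer risk is acceptable.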

Even the most aggressive AI-first implementation shows that escalation pathways require as much engineering rigor as the automation itself. For Klarna, the pendulum didn’t swing back to “humans do everything”. It swung toward a hybrid model where the transitions are as carefully designed as the endpoints.

What Good Escalation Design Actually Looks Like

Leading contact center solutions now center escalation pathways—context summaries, intelligent routing, seamless handoffs—in their core product positioning.

Salesforce Agentforce Voice (announced Oct 2025):

Salesforce’s Agentforce Voice emphasizes what they call “seamless human handoff” with full conversation history and context preserved. Escalation triggers are configurable through Agentforce Builder, and the system integrates with Omni-Channel for skills-based routing. That means customers get transferred to agents with relevant expertise, not just whoever is available.

Cisco Webex AI Agent:

Cisco’s Webex AI Agent takes a similar approach: Context Summaries facilitate handoffs from AI to human agents; Mid-call Summary, released in Q4 2025, provides instant context during transfers, so agents don’t start cold; and Wrap-up Summaries auto-generate interaction records.

Cisco reported that Webex AI Agent reduced call escalations by 85 percent for one large equipment rental customer. It did so not by preventing escalations entirely, but by handling more issues autonomously because the escalation pathway was trustworthy when needed.

What Salesforce and Cisco reveal about escalation design:

The pattern across both platforms points to a common set of escalation design elements:

- Full conversation transcript transfer: Everything the customer said, everything the AI attempted, every piece of information already collected.

- Sentiment and intent detection passed to the agent: Not just what was discussed, but how the customer was feeling and what they’re actually trying to accomplish.

- Skills-based routing that considers escalation context: Matching customers to agents based on the specific nature of their issue, not just queue availability.
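To make the three elements above concrete, here is a minimal sketch of a handoff payload and a routing function that uses it. It does not reflect the Salesforce or Cisco APIs. Every name (`HandoffContext`, `Agent`, `route`) and every field is an assumption chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HandoffContext:
    """Hypothetical payload that travels with the customer at escalation."""
    transcript: list          # everything the customer and the AI said
    attempted_steps: list     # what the AI already tried
    collected_fields: dict    # information already gathered (order ID, etc.)
    sentiment: str            # e.g. "frustrated", "neutral"
    intent: str               # e.g. "refund", "billing"


@dataclass
class Agent:
    name: str
    skills: set
    available: bool = True


def route(context: HandoffContext, agents: list) -> Optional[Agent]:
    """Prefer an available agent skilled in the detected intent.

    Falls back to any available agent, and returns None if nobody is free.
    """
    available = [a for a in agents if a.available]
    skilled = [a for a in available if context.intent in a.skills]
    if skilled:
        return skilled[0]
    return available[0] if available else None


if __name__ == "__main__":
    ctx = HandoffContext(
        transcript=["Customer: I was charged twice.", "AI: Let me check."],
        attempted_steps=["looked up last invoice"],
        collected_fields={"order_id": "A-1001"},
        sentiment="frustrated",
        intent="refund",
    )
    team = [Agent("Ana", {"billing"}), Agent("Ben", {"refund"})]
    chosen = route(ctx, team)
    print(chosen.name if chosen else "no agent available")
```

The fallback to any available agent is one possible policy. A team could equally hold the customer for a skilled agent when the detected sentiment is poor.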

The Engineering Challenges Nobody Talks About

Escalation design requires cross-system integration, real-time data flow, and deliberate UX decisions that most implementations overlook. This is part of why AI failure rates in customer experience remain stubbornly high.

The statistics paint a challenging picture:

- 74 percent of enterprise CX AI programs fail to deliver (E-Globalis)

- Only one in four AI projects delivers on promised ROI (IBM)

- 42 percent of companies abandoned most AI initiatives (S&P Global)

- 40 percent of agentic AI projects may be cancelled by end of 2027 (Gartner)

These numbers reflect many failure modes, but in our experience, escalation design contributes disproportionately to the gap between pilot success and production disappointment. The underlying integration problems are hard for engineering teams to solve:

- CRM integration gaps mean agents don’t see customer history when calls transfer

- Knowledge base disconnection means handoff summaries don’t include what solutions were already attempted

- Ticketing system silos mean case creation happens after the transfer rather than during, losing context in the transition

- Latency issues that are tolerable in chat become unacceptable in voice conversations

- Legacy system constraints mean transcripts are incomplete when calls forward from existing IVR infrastructure
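One way to catch several of these gaps is a completeness check that runs before the transfer rather than after it. The sketch below is an assumption-laden illustration: the field names and the `handoff_gaps` helper are hypothetical, and a real check would read from the actual CRM, ticketing, and transcript systems.

```python
# Hypothetical fields a handoff payload should carry before the call transfers.
REQUIRED_FIELDS = ("customer_id", "transcript", "attempted_solutions", "case_id")


def handoff_gaps(payload: dict) -> list:
    """Return the names of required fields that are absent or unusable.

    An empty 'attempted_solutions' list is allowed (nothing was tried yet),
    but an empty transcript is treated as a gap.
    """
    gaps = [name for name in REQUIRED_FIELDS if payload.get(name) is None]
    if payload.get("transcript") == []:
        gaps.append("transcript")
    return gaps


if __name__ == "__main__":
    payload = {
        "customer_id": "C-42",
        "transcript": [],           # e.g. lost when forwarding from a legacy IVR
        "attempted_solutions": [],
        # "case_id" missing: the ticket was not created before the transfer
    }
    print(handoff_gaps(payload))
```

Requiring a `case_id` up front forces case creation to happen during the transfer, which is the ordering the ticketing bullet above argues for.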

The Commonwealth Bank of Australia Story

Commonwealth Bank of Australia learned this lesson publicly in August 2025. Australia’s largest bank rolled out an AI voice bot to handle routine customer queries, claiming it reduced call volumes by 2,000 per week.

Based on those numbers, the bank cut 45 customer service roles.

The Finance Sector Union challenged the data. Their members reported the opposite reality: call volumes were actually rising. Staff were offered overtime. Team leaders were pulled onto phones to handle overflow. The bot was creating escalations rather than reducing them.

Within weeks, CBA reversed the redundancies and publicly apologized. A spokesperson conceded that the bank “did not adequately consider all relevant business considerations”.

The lesson is instructive. Automation metrics can look good on paper while escalation pathways quietly fail. CBA’s bot may have handled simple queries effectively, but without effective handoffs for anything complex, the human workload increased rather than decreased. The AI worked. The system surrounding it didn’t.

Gartner’s Prediction and the Operational Reality

The trajectory of AI in customer service points toward increasing autonomy. Gartner predicts agentic AI will resolve 80 percent of common customer service issues without human intervention by 2029, driving 30 percent reductions in operational costs. By 2028, they expect 70 percent of customer service journeys will begin and end with third-party conversational assistants.

The pressure to move in this direction is already acute. A Gartner survey from April-May 2025 found that 77 percent of service leaders feel pressure from executives to deploy AI. Eighty-five percent of customer service leaders plan to explore or pilot customer-facing conversational GenAI in 2025.

But Gartner’s guidance on implementation is notably focused on boundaries, not just capabilities. Their recommendations include defining AI interaction policies that explicitly address escalation, revising service models to handle AI-driven volume, and implementing dynamic routing that differentiates between human and AI interactions.

The Implementation Partner Question

The gap between AI capability and AI success is implementation. This is particularly true for escalation pathways, which require deep platform expertise and cross-system integration that spans contact center infrastructure, CRM, knowledge management, and workforce routing.

What separates implementations that work from those that don’t often comes down to whether the team building the system has thought through what happens when AI can’t handle something.

When evaluating an implementation partner, several factors matter more than vendor certification badges or case study counts:

- Experience with both telephony/contact center and CRM integration: Not just one or the other, but the intersection where escalation actually happens.

- Understanding of escalation threshold configuration and testing: Knowing where to set the confidence boundaries and how to tune them based on real interaction data.

- Track record with context transfer across systems: Including the legacy infrastructure that most enterprises actually have.

- Ability to design “warm welcome” handoff experiences: The agent greets the customer by name, acknowledges the issue, and picks up where the AI left off rather than starting from zero.
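Threshold tuning based on real interaction data, as mentioned in the list above, can be approached in many ways. The sketch below shows one simple option under stated assumptions: past interactions are available as pairs of AI confidence and whether the AI’s answer turned out to be correct, and the goal is the lowest threshold at which answered interactions meet a target accuracy. The `pick_threshold` function and the sample data are hypothetical.

```python
from typing import Optional


def pick_threshold(history: list, target_accuracy: float = 0.9) -> Optional[float]:
    """Return the lowest confidence threshold meeting the accuracy target.

    history is a list of (confidence, was_correct) pairs from past
    interactions. Interactions below the threshold would be escalated, so
    accuracy is measured only over those at or above it. Returns None if no
    threshold meets the target.
    """
    candidates = sorted({confidence for confidence, _ in history})
    for threshold in candidates:
        answered = [ok for confidence, ok in history if confidence >= threshold]
        if answered and sum(answered) / len(answered) >= target_accuracy:
            return threshold
    return None


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    sample = [
        (0.95, True), (0.90, True), (0.80, True),
        (0.70, False), (0.60, True), (0.50, False),
    ]
    print(pick_threshold(sample))
```

Choosing the lowest qualifying threshold keeps as many interactions automated as the accuracy target allows. Re-running the selection on fresh data after deployment is what post-deployment tuning amounts to in this simplified picture.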

The questions to ask are specific:

- How do you handle context preservation when calls transfer from legacy IVR?

- What’s your approach to escalation threshold tuning post-deployment?

- How do you test handoff scenarios before go-live?

- What visibility do agents have into AI conversation history?

The answers reveal whether a partner has actually solved these problems in production or is learning on your implementation.

Escalation design isn’t the most exciting part of AI deployment. It doesn’t generate headlines (unless it fails) or impress boards with futuristic demos. But it’s increasingly where contact center AI succeeds or fails in practice.

The organizations getting this right aren’t the ones with the most sophisticated automation. They’re the ones who’ve invested equal rigor in what happens at the boundaries: when AI reaches its limits and a human needs to step in without the customer noticing the seam.

Get Escalation Design Right

Bucher + Suter specializes in the engineering that makes AI work in production: Cisco and Salesforce integration, escalation threshold configuration, context transfer across legacy systems, and the “warm welcome” handoff experiences that keep customers from starting over. Contact us today.